Photo by Nathana Rebouças on Unsplash

How to Make a Custom Search Tool Using Microsoft Guidance and OpenAI

Guidance is becoming my go-to for LLM product development. This new language developed by Microsoft to control Large Language Models lets you design a conversational prompt intuitively.

In this post, we will look at how to create a search tool in Guidance that gives an LLM the ability to incorporate up-to-date data from the internet.

Defining the prompt

First, we will define the prompt.

{{#system~}}
You are a helpful assistant.
{{~/system}}

{{#user~}}
From now on, when asked a question convert it into a search query
and wrap it as such: <search>query</search> before responding.
I will then paste web results in, and you can respond.
{{~/user}}

{{#assistant~}}
Ok, I'm ready.
{{~/assistant}}

{{#user~}}
{{user_query}}
{{~/user}}

{{! This part will make sense later!}}
{{#assistant~}}
{{! Convert the provided question to a search query}}
{{gen "query" stop="</search>"}}
{{#if (is_search query)}}
</search>
{{/if}}
{{~/assistant}}

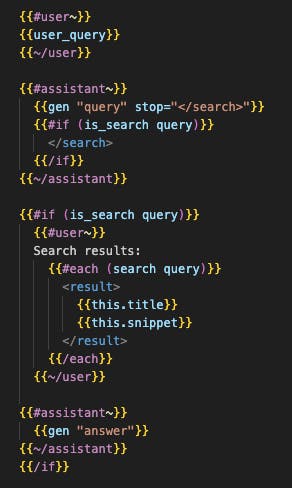

{{#if (is_search query)}}
{{#user~}}
Search results:
{{#each (search query)}}
<result>
{{this.title}}
{{this.snippet}}
</result>
{{/each}}
{{~/user}}

{{#assistant~}}
{{gen "answer"}}
{{~/assistant}}
{{/if}}

Many people have reported that ChatGPT returns better answers over the course of a conversation than in response to a single question. This is closely related to Chain-of-Thought (CoT) prompting, and a recent theoretical paper argues that CoT effectively increases the depth of the neural network, allowing an LLM to solve more complex problems. (For those interested, the paper is "Towards Revealing the Mystery behind Chain of Thought: a Theoretical Perspective".)

Guidance lets you lay out a whole conversation between User and Assistant in a single prompt, which is crucial for developing a high-quality prompt.
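To make the role structure concrete, here is a rough sketch of the chat messages that the first four blocks of the template correspond to. The `build_messages` helper is hypothetical and not part of Guidance, which assembles the conversation for you; it only illustrates the shape of the dialogue the model sees before its first generation step.

```python
def build_messages(user_query: str) -> list[dict]:
    """Return the conversation that precedes the first generation step."""
    return [
        {"role": "system", "content": "You are a helpful assistant."},
        {
            "role": "user",
            "content": (
                "From now on, when asked a question convert it into a search query "
                "and wrap it as such: <search>query</search> before responding. "
                "I will then paste web results in, and you can respond."
            ),
        },
        {"role": "assistant", "content": "Ok, I'm ready."},
        # The {{user_query}} variable is filled in at call time.
        {"role": "user", "content": user_query},
    ]


messages = build_messages("When is WWDC?")
for message in messages:
    print(f"{message['role']}: {message['content']}")
```

The scripted "Ok, I'm ready." turn is written by us rather than generated, which is what lets a single prompt carry a whole staged conversation.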

The template language is loosely based on Handlebars, so I like to save the prompt with a .handlebars extension (here, prompts/main.handlebars). This way you get syntax highlighting in VS Code (until someone makes a Guidance extension!).

I also like to keep the prompt in a separate file and define a class that reads the file from a path and initializes the guidance object, like so:

import guidance


class SearchGPT:
    def __init__(self):
        self.embedding_model = "gpt-3.5-turbo"
        self.assistant = self.__init_assistant__()

    def __init_assistant__(self):
        # Clear cached completions so each run queries the model afresh
        guidance.llms.OpenAI.cache.clear()
        llm = guidance.llms.OpenAI("gpt-3.5-turbo")

        # load prompt here
        with open('./prompts/main.handlebars', 'r') as f:
            return guidance(f.read(), llm=llm)
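One caveat with a relative path such as `./prompts/main.handlebars` is that it is resolved against the current working directory, so the file is only found when the process starts from the project root. Below is a minimal sketch of one way around that, assuming a hypothetical `load_prompt` helper that takes the base directory explicitly. The temporary directory exists only to make the example runnable on its own.

```python
import tempfile
from pathlib import Path


def load_prompt(base_dir, name="main.handlebars"):
    """Read a prompt template from <base_dir>/prompts/<name>."""
    path = Path(base_dir) / "prompts" / name
    if not path.is_file():
        raise FileNotFoundError(f"Prompt template not found: {path}")
    return path.read_text(encoding="utf-8")


# Demo: create a throwaway prompts folder and load a template from it.
with tempfile.TemporaryDirectory() as tmp:
    prompts_dir = Path(tmp) / "prompts"
    prompts_dir.mkdir()
    (prompts_dir / "main.handlebars").write_text("{{user_query}}", encoding="utf-8")
    template = load_prompt(tmp)

print(template)
```

Inside the class, passing `Path(__file__).parent` as `base_dir` would anchor the lookup to the module's own directory, assuming the `prompts` folder sits next to it.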

Creating Search tool

The beauty of Guidance is that you can define a tool the same way you would define a Python function. LangChain tends to be too encapsulated, leaving little room for customization; I find Guidance's lower level of abstraction a better fit for production.

Now let's create the search tool. We use the Serper API (google.serper.dev) to send the query to Google Search.

import json

import requests


def gsearch(search_term):
    url = "https://google.serper.dev/search"
    payload = json.dumps({
        "q": search_term
    })
    headers = {
        'X-API-KEY': 'YOUR_API_KEY',
        'Content-Type': 'application/json'
    }

    response = requests.request("POST", url, headers=headers, data=payload)
    return response.json()
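Hard-coding the API key as in `gsearch` makes it easy to leak into version control. The sketch below separates building the request from sending it and reads the key from an environment variable. The variable name `SERPER_API_KEY` and the helper `build_search_request` are assumptions made for this example, not something the search API prescribes.

```python
import json
import os

SEARCH_URL = "https://google.serper.dev/search"


def build_search_request(search_term, api_key=None):
    """Return the URL, headers and JSON body for a search request."""
    # Assumption: the key lives in an environment variable of this name.
    api_key = api_key or os.environ.get("SERPER_API_KEY")
    if not api_key:
        raise ValueError("No API key given and SERPER_API_KEY is not set")
    headers = {"X-API-KEY": api_key, "Content-Type": "application/json"}
    payload = json.dumps({"q": search_term})
    return SEARCH_URL, headers, payload


url, headers, payload = build_search_request("When is WWDC?", api_key="test-key")
print(url)
print(payload)
```

The three values can be passed to `requests.post(url, headers=headers, data=payload, timeout=10)`; the timeout keeps a slow search from stalling the whole request.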

Now let's use gsearch() to add a search method to the SearchGPT class we defined earlier, along with some helper functions.

import guidance

from tools import search


class SearchGPT:
    def __init__(self):
        self.embedding_model = "gpt-3.5-turbo"
        self.assistant = self.__init_assistant__()

    def __init_assistant__(self):
        guidance.llms.OpenAI.cache.clear()
        llm = guidance.llms.OpenAI("gpt-3.5-turbo")

        # load prompt here
        with open('./prompts/main.handlebars', 'r') as f:
            return guidance(f.read(), llm=llm)

    # new from here
    async def run(self, query):
        # Pass the helpers in so the template can call them by name
        res = await self.assistant(
            search=self.search,
            is_search=self.__is_search,
            user_query=query,
            async_mode=True
        )
        return res

    def search(self, query):
        return self.__top_snippets(query, n=5)

    def __is_search(self, completion):
        # True when the model's output contains a <search> tag
        return '<search>' in completion

    def __top_snippets(self, query, n):
        # Generation stops at </search>, so only the opening tag needs removing
        query = query.replace("<search>", "")
        top_snippets = []

        data = search.gsearch(query)
        organic_data = data.get('organic', [])

        for item in organic_data[:n]:
            title = item.get('title')
            snippet = item.get('snippet')
            new_item = {}
            if title:
                new_item['title'] = title
            if snippet:  # only append if snippet is not None
                new_item['snippet'] = snippet
                top_snippets.append(new_item)

        return top_snippets
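To see what `__top_snippets` does to a response, here is the same filtering logic as a standalone function run on a hand-written payload. The sample data is invented for illustration and only mimics the `organic` list that the code above reads; a real response contains more fields.

```python
def top_snippets(data, n):
    """Keep title/snippet for those of the first n organic results that have a snippet."""
    results = []
    for item in data.get("organic", [])[:n]:
        title = item.get("title")
        snippet = item.get("snippet")
        if not snippet:
            continue  # skip results without a snippet
        entry = {}
        if title:
            entry["title"] = title
        entry["snippet"] = snippet
        results.append(entry)
    return results


# Invented sample payload: one complete result, one without a snippet,
# and one without a title.
sample = {
    "organic": [
        {"title": "First page", "snippet": "Some text", "link": "https://example.com/1"},
        {"title": "No snippet here"},
        {"snippet": "Snippet without a title"},
    ]
}

print(top_snippets(sample, n=5))
```

Note that the slice is taken before filtering, so fewer than `n` results can come back, and a result may lack a `title` key, which the template would then render as empty.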

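If we go back to the prompt, you can see how these methods come into play: is_search checks whether the model's output contains a <search> tag, and search supplies the results that the {{#each}} loop pastes back into the conversation.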
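As a rough mental model, the control flow that the template encodes can be written out in plain Python. Everything here is a stand-in: `fake_model` and `fake_search` are hypothetical stubs replacing the LLM call and the search tool, and Guidance itself does not work by calling a function like `run_flow`.

```python
def is_search(completion):
    return "<search>" in completion


def render_results(results):
    """Format results roughly the way the {{#each}} block does."""
    blocks = [
        f"<result>\n{r.get('title', '')}\n{r.get('snippet', '')}\n</result>"
        for r in results
    ]
    return "Search results:\n" + "\n".join(blocks)


def run_flow(user_query, model, search):
    # Step 1: generation stops at "</search>", so the closing tag is absent.
    query = model(user_query)
    if not is_search(query):
        return {"query": query}  # no search requested, so no "answer" is produced
    # Step 2: strip the opening tag and fetch results.
    results = search(query.replace("<search>", ""))
    # Step 3: paste the results back as a user turn and generate the answer.
    answer = model(render_results(results))
    return {"query": query, "answer": answer}


def fake_model(text):
    # Stub: canned answer for pasted results, a search tag for anything else.
    if text.startswith("Search results:"):
        return "Answer based on: " + text.splitlines()[2]
    return "<search>" + text


def fake_search(query):
    return [{"title": "Title for " + query, "snippet": "Snippet"}]


out = run_flow("When is WWDC?", fake_model, fake_search)
print(out["answer"])
```

One thing the sketch makes visible: when the model does not emit a `<search>` tag, the second half of the template is skipped and no `answer` variable is generated, so callers may want to handle that case.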

Putting everything together

I've made a simple API using FastAPI.

from fastapi import FastAPI
from pydantic import BaseModel

from assistant import SearchGPT

app = FastAPI()
searchGPT = SearchGPT()


class Query(BaseModel):
    question: str


@app.post("/answer")
async def run_program(query: Query):
    question = query.question
    print("---")
    print("Question: {}".format(question))

    if question == "":
        return {"answer": "Please ask a question"}

    ans = await searchGPT.run(question)
    print("""\nAnswer: {}\n""".format(ans["answer"]))
    print("---")

    return {"answer": ans["answer"]}
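Once the server is running, the endpoint can be exercised with a small client. This sketch uses only the standard library and assumes the app is served locally at `http://127.0.0.1:8000` (uvicorn's default address); the URL and the helper names `build_request` and `ask` are assumptions for illustration, so adjust them to your setup.

```python
import json
import urllib.request

API_URL = "http://127.0.0.1:8000/answer"  # assumed local address


def build_request(question, url=API_URL):
    """Build a POST request whose JSON body matches the Query model."""
    body = json.dumps({"question": question}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(question, url=API_URL):
    """Send the question and return the 'answer' field of the response."""
    with urllib.request.urlopen(build_request(question, url), timeout=60) as resp:
        return json.loads(resp.read().decode("utf-8"))["answer"]


# Building the request needs no running server; sending it does.
request = build_request("When is WWDC?")
print(request.get_method(), request.full_url)
print(request.data.decode("utf-8"))
```

With the server up, `ask("When is WWDC?")` would return the answer string. The generous timeout reflects that each call involves two model generations plus a web search.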

Results

Question: When is WWDC?


<|im_start|>system

You are a helpful assistant.<|im_end|>

<|im_start|>user

From now on, when asked a question convert it into a search query and wrap it as such: <search>query</search> before responding. I will then paste web results in, and you can respond.<|im_end|>

<|im_start|>assistant

Ok, I'm ready.<|im_end|>

<|im_start|>user

When is WWDC?<|im_end|>

<|im_start|>assistant

<search>When is WWDC?</search>

<|im_end|>

<|im_start|>user

Search results:

<result>

WWDC 2023: June 5 Keynote – Headset, iOS 17, and More - MacRumors

Apple's 34th Worldwide Developers Conference will take place the week of June 5 to June 9, 2023, beginning with a keynote event that is set to ...

</result>

<result>

WWDC 2023: Everything you need to know - Macworld

WWDC 2023 starts on Monday, June 5 with a keynote event that will start at 10 a.m. in California. If you are wondering what time will the WWDC keynote start ...

</result>

<result>

Apple's Worldwide Developers Conference returns June 5 - Apple

CUPERTINO, CALIFORNIA Apple today announced it will host its annual Worldwide Developers Conference (WWDC) in an online format from June 5 ...

</result>

<result>

WWDC 2023: Here's everything to expect at Apple's special event this year

WWDC 2023 will kick off on Monday, June 5. Apple will open the week-long event with a special keynote on June 5 at 10 a.m. PT/1 p.m. ET. During ...

</result>

<result>

WWDC 2023 dates, iOS 17, Apple AR/VR headset and more | Tom's Guide

Apple has announced that WWDC 2023 will kick off June 5 and run through June 9. There will be a "special experience" for developers and students in person at ...

</result>

<|im_end|>

<|im_start|>assistant

According to the search results, WWDC 2023 will take place from

June 5 to June 9, 2023, and will begin with a keynote event on

June 5 at 10 a.m. PT/1 p.m. ET.

<|im_end|>

Question: How much is SpaceX worth today?


<|im_start|>system

You are a helpful assistant.<|im_end|>

<|im_start|>user

From now on, when asked a question convert it into a search query and wrap it as such: <search>query</search> before responding. I will then paste web results in, and you can respond.<|im_end|>

<|im_start|>assistant

Ok, I'm ready.<|im_end|>

<|im_start|>user

How much is SpaceX worth today?<|im_end|>

<|im_start|>assistant

<search>What is the current valuation of SpaceX?</search>

<|im_end|>

<|im_start|>user

Search results:

<result>

SpaceX Reportedly Raising $750 Million In Fresh Funding At $137 Billion Valuation

Elon Musk's commercial rocket company SpaceX is raising $750 million in its latest funding round at a valuation of $137 billion, ...

</result>

<result>

SpaceX Raising $750 Million, Valuing it at $137 Billion - Investopedia

Elon Musk's SpaceX will seek to raise $750 million in a new funding round that values the company at $137 billion. SpaceX raised $2.2 billion ...

</result>

<result>

SpaceX to raise $750 million at $137 billion valuation - CNBC - Reuters

Jan 2 (Reuters) - Elon Musk's SpaceX is raising $750 million in a new round of funding that values the rocket and satellite company at $137 ...

</result>

<result>

SpaceX Shooting For $750M Round At $137B Valuation — Report - Crunchbase News

Hawthorne, California-based SpaceX raised $1.68 billion in June 2022 that valued it at more than $125 billion. The space startup quickly ...

</result>

<result>

SpaceX raising $750 million at $137 billion valuation, a16z investing - CNBC

The company raised more than $2 billion in 2022, including a $250 million round in July, and was valued at $127 billion during an equity ...

</result>

<|im_end|>

<|im_start|>assistant

According to recent reports, SpaceX is currently raising

$750 million in a new funding round at a valuation of

$137 billion.

<|im_end|>

You can see that the Assistant was able to use the search results to answer the User's question. This was a simple example, but by building on it, you can give an LLM much more complex problem-solving abilities.

P.S.

At Seeai, we help with LLM-powered software development. For any questions, contact us!